Indian elephant bull in musth in Bandipur National Park – credit Yathin S Krishnappa CC 3.0.

Rather than taking their software and programming degrees into the tech sector, two young Kerala men are using them to bring India’s efforts to track, prevent, and punish wildlife crime into the 21st century with a suite of sophisticated apps and tools. They believe they are the first to bring this level of digitization into wildlife conservation, allowing courts to rapidly process wildlife crime cases, rangers to track and analyze patterns of criminal activity in forests, and much more.

Paper records, written by hand or reconstructed from memory, are the kind of data that so many ranger teams and prosecutors of wildlife crime around the world have to rely on in the course of their noble work, and India is no exception.

“I realized how there is a gap in the market. There is almost zero technology to track any kind of wildlife crime in India,” said Allen Shaji, co-founder of Leopard Tech Labs. “Working with the Wildlife Trust of India and with their support, our company was able to make HAWK or ‘Hostile Activity Watch Kernel’ with the forest department of Kerala,” he says.

Together with his college buddy and fellow co-founder Sobin Mathew, Allen developed four unique programs at Leopard Tech Labs that are now in use by the Kerala Forest Department: Cyber HAWK, SARPA (Snake Awareness, Rescue and Protection App), Jumbo Radar, and WildWatch.

“HAWK is an offense management system that includes case handling, court case monitoring, communication management, and wildlife death monitoring,” Allen told The Better India, explaining that before this, all casework was recorded on paper. Now, HAWK can quickly summarize vast amounts of data into various kinds of digital documents, such as a Google spreadsheet, a PDF, or a Microsoft Excel file.

HAWK can search the data inputs in seconds, enabling real-time answers to be generated while court or parliament is in session, whether that’s a spreadsheet on the year-over-year rate of elephant deaths, or a police report from the scene of a wildlife crime arrest or trafficking bust. HAWK is not only used by the authorities but also contains large datasets provided by the IUCN, the world’s largest wildlife conservation organization.

Sobin Mathew and Allen Shaji of Leopard Tech Labs – credit Leopard Tech Labs, released to The Better India

In addition to HAWK, Jumbo Radar allows the forest departments of India to track elephants in real time in case they should depart a nature reserve, while WildWatch uses machine learning to predict future incidents of human-wildlife conflict before they happen. In particular, it uses seasonal movements of animals, past records of violence against wildlife, and data on crops, including the amount of land cropped, the proximity to nature reserves, and when in the year humans are working on the boundaries of the cropped areas, to predict where conflicts will happen before they do.

“This information allows for targeted interventions, such as advising villagers to relocate or alter crop cultivation practices, thereby mitigating conflicts and promoting coexistence,” Allen says.

Already Leopard Tech Labs’ products are moving beyond Kerala to Tamil Nadu, and three tiger reserves have begun using their suite of solutions. Leopard Tech has even developed an app for Brazil to help reduce human-snake conflict.

Wildlife trafficking is the third-most lucrative illegal trade in the world, and nations with weak enforcement of environmental laws risk becoming hotbeds for poaching of far more than just elephants and rhinos.

Rather Than Taking Jobs in Tech, 2 Young Software Engineers Use Talents to Crush Poaching in India (2026-01-05T13:50:00+05:30)

New online tool to transform how high blood pressure is treated (2025-11-04T11:11:00+05:30)

IANS Photo

New Delhi, (IANS): A global team of researchers from India, Australia, the US, and the UK has developed a novel online tool that could transform how hypertension is managed, allowing doctors to choose a treatment for each patient based on the degree to which they need to lower their blood pressure. The 'blood pressure treatment efficacy calculator' is built on data from nearly 500 randomised clinical trials in over 100,000 people. It allows doctors to see how different medications are likely to lower blood pressure.

“We cannot overlook the importance of controlling high blood pressure effectively and efficiently. Achieving optimal control requires a clear understanding of the efficacy of antihypertensive drugs at different doses and in various combinations. Without clarity on what we want to achieve and how to achieve it, we will not meet our targets. Guidelines define the target blood pressure, while our online tool helps identify which antihypertensive drugs are best suited to reach that target,” said Dr. Mohammad Abdul Salam, from The George Institute for Global Health, Hyderabad.

A single antihypertensive medication, still the most common way treatment is started, typically lowers systolic BP by just 8-9 mmHg, while most patients need reductions of 15-30 mmHg to reach ideal targets. Nelson Wang, cardiologist and Research Fellow at the Institute, noted that the traditional approach is to measure each patient’s blood pressure directly and adjust treatment accordingly, but BP readings are far too variable, or ‘noisy’, for that approach to be reliable.

The new tool, described in research published in The Lancet, helps overcome this challenge by calculating the average treatment effect seen across hundreds of trials. It also categorises treatments as low, moderate, and high intensity, based on how much they lower blood pressure (BP), an approach already routinely used in cholesterol-lowering treatment.
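The matching idea described above, comparing the reduction a patient needs with the average reduction a treatment is expected to deliver, can be illustrated with a minimal Python sketch. Everything numeric here is an assumption made for illustration: the intensity cut-offs, the regimen names and the combination effects are placeholders, not values taken from the published calculator. Only the 8.5 mmHg single-drug figure is chosen to sit inside the 8-9 mmHg range quoted in the article. It is not clinical guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Regimen:
    name: str
    expected_sbp_drop: float  # average systolic reduction in mmHg (illustrative)


def intensity(drop_mmhg: float, low_max: float = 10.0, moderate_max: float = 20.0) -> str:
    """Label a treatment by its average effect.

    The two cut-offs are placeholder assumptions, not the calculator's own.
    """
    if drop_mmhg < low_max:
        return "low"
    if drop_mmhg < moderate_max:
        return "moderate"
    return "high"


def regimens_meeting_target(current_sbp: float, target_sbp: float, regimens):
    """Return regimens whose average effect covers the reduction needed."""
    needed = current_sbp - target_sbp
    return [r for r in regimens if r.expected_sbp_drop >= needed]


# Hypothetical regimens; only the first figure reflects the article's 8-9 mmHg.
REGIMENS = [
    Regimen("single drug, standard dose", 8.5),
    Regimen("hypothetical two-drug combination", 15.0),
    Regimen("hypothetical three-drug combination", 22.0),
]

if __name__ == "__main__":
    for r in REGIMENS:
        print(f"{r.name}: {intensity(r.expected_sbp_drop)} intensity")
    # A patient at 150 mmHg aiming for 130 mmHg needs a 20 mmHg reduction.
    for r in regimens_meeting_target(150, 130, REGIMENS):
        print("meets target:", r.name)
```

Working from trial averages rather than from one patient’s readings is the point of the design: a fixed expected effect per regimen is not thrown off by visit-to-visit noise in individual measurements.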
High blood pressure is one of the world’s biggest health challenges, affecting as many as 1.3 billion people and leading to around ten million deaths each year. Often called a silent killer as it does not cause any symptoms on its own, it can remain hidden until it leads to a heart attack, stroke, or kidney disease. Fewer than one in five people with hypertension have it under control.

New online tool to transform how high blood pressure is treated | MorungExpress | morungexpress.com

California Launches Free Behavioral Health Apps for Children, Young Adults, and Families (2025-10-17T10:45:00+05:30)

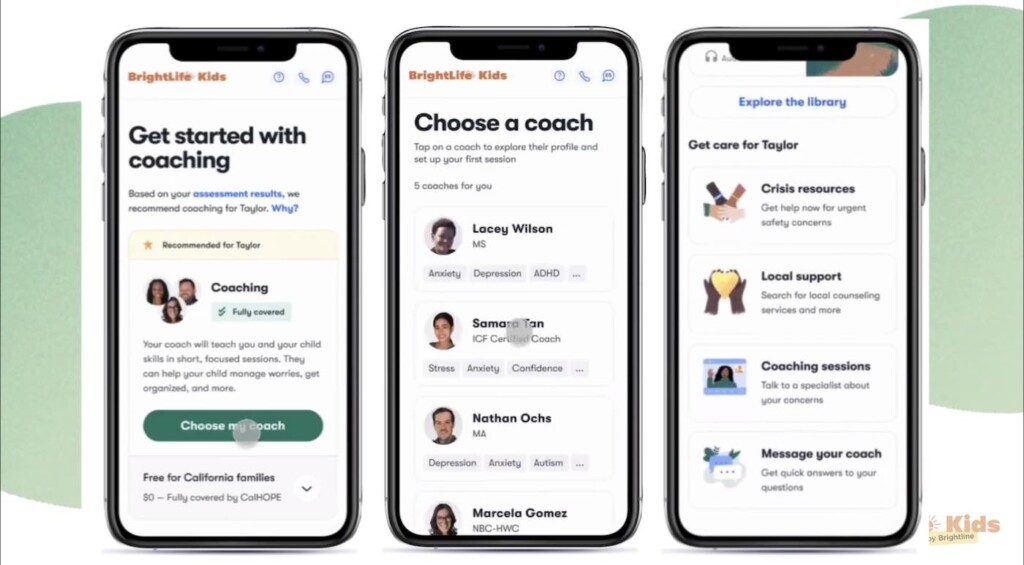

Photo by Annie Spratt

California’s Department of Health Care Services launched two free behavioral health digital services for all families with kids, teens, and young adults up to age 25. The partnerships have been years in the making, as the state announced in 2021 the opportunity to collaborate on a new initiative to combat the youth mental health crisis. The state selected two platforms, Brightline for young kids and Kooth’s Soluna for teens and young adults, to be the cornerstone of Governor Gavin Newsom’s Children and Youth Behavioral Health Initiative (CYBHI). The tools are free for all California families, regardless of income or health insurance.

“About two-thirds of California kids with depression do not receive treatment. This platform will help meet the need by expanding access to critical behavioral health supports,” said Dr. Mark Ghaly, Secretary of the California Health & Human Services Agency. “Our young people will have an accessible option to get the help they need.”

Between 2019 and 2021, about one-third of California adolescents experienced serious psychological distress, with a 20 percent increase in adolescent suicides. Meanwhile, the mental health provider shortage is causing longer wait times for appointments with community-based mental health providers. Availability is particularly limited among the uninsured and people with low incomes.

“The Behavioral Health Virtual Services Platform will give access to services early on, reducing the likelihood of escalation to more serious conditions,” said Ghaly.

BrightLife Kids

Both the web and app-based applications will offer coaching services in English and Spanish, as well as telephone-based coaching in all Medi-Cal threshold languages. The platforms include:

Both apps have strict privacy and confidentiality requirements and must adhere to all applicable state laws and regulations pertaining to privacy and security. Each app will also follow robust safety and risk escalation protocols. Trained behavioral health professionals will monitor app usage to identify potential risks, and licensed behavioral health professionals will be on standby to intervene, if clinically appropriate.

Where to find the apps

BrightLife Kids is available for download on iOS devices in the Apple App Store. The app for Android devices will be available in the summer, but the service is also accessible from any device, including a computer, at CalHOPE.org. The Soluna app for older youth is available for both iOS and Android devices in the Apple App Store and Google Play Store.

Australia set to ban ‘nudify’ apps. How will it work? (2025-09-08T10:51:00+05:30)


The Australian government has announced plans to ban “nudify” tools and hold tech platforms accountable for failing to prevent users from accessing them. This is part of the government’s overall strategy to move towards a “digital duty of care” approach to online safety. This approach places legal responsibility on tech companies to take proactive steps to identify and prevent online harms on their platforms and services. So how will the nudify ban happen in practice? And will it be effective?

How are nudify tools being used?

Nudify or “undress” tools are available on app stores and websites. They use artificial intelligence (AI) methods to create realistic but fake sexually explicit images of people. Users upload a clothed, everyday photo, which the tool analyses before digitally removing the person’s clothing by putting their face onto a nude body (or what the AI “thinks” the person would look like naked).

The problem is that nudify tools are easy to use and access. The images they create can also look highly realistic and can cause significant harms, including bullying, harassment, distress, anxiety, reputational damage and self-harm.

These apps – and other AI tools used to generate image-based abuse material – are an increasing problem. In June this year, Australia’s eSafety Commissioner revealed that reports of deepfakes and other digitally altered images of people under 18 have more than doubled in the past 18 months. In the first half of 2024, 16 nudify websites that were named in a lawsuit issued by the San Francisco City Attorney David Chiu were visited more than 200 million times. In a July 2025 study, 85 nudify websites had a combined average of 18.5 million visitors for the preceding six months. Some 18 of the websites – which rely on tech services such as Google’s sign-on system, or Amazon and Cloudflare’s hosting or content delivery services – made between US$2.6 million and $18.4 million in the past six months.
Aren’t nudify tools already illegal?

For adults, sharing (or threatening to share) non-consensual deepfake sexualised images is a criminal offence under most Australian state, federal and territory laws. But aside from Victoria and New South Wales, it is not currently a criminal offence to create digitally generated intimate images of adults.

For children and adolescents under 18, the situation is slightly different. It’s a criminal offence not only to share child sexual abuse material (including fictional, cartoon or fake images generated using AI), but also to create, access, possess and solicit this material.

Developing, hosting and promoting the use of these tools for creating either adult or child content is not currently illegal in Australia. Last month, independent federal MP Kate Chaney introduced a bill that would make it a criminal offence to download, access, supply or offer access to nudify apps and other tools of which the dominant or sole purpose is the creation of child sexual abuse material. The government has not taken on this bill. It instead wants to focus on placing the onus on technology companies.

How will the nudify ban actually work?

Minister for Communications, Anika Wells, said the government will work closely with industry to figure out the best way to proactively restrict access to nudify tools. At this point, it’s unclear what the time frames are or how the ban will work in practice. It might involve the government “geoblocking” access to nudify sites, or directing the platforms to remove access (including advertising links) to the tools. It might also involve transparency reporting from platforms on what they’re doing to address the problem, including risk assessments for illegal and harmful activity.

But government bans and industry collaboration won’t completely solve the problem. Users can get around geographic restrictions with VPNs or proxy servers.
The tools can also be used “off the radar” via file-sharing platforms, private forums or messaging apps that already host nudify chatbots. Open-source AI models can also be fine-tuned to create new nudify tools.

What are tech companies already doing?

Some tech companies have already taken action against nudify tools. Discord and Apple have removed nudify apps and developer accounts associated with nudify apps and websites. Meta also bans adult content, including AI-generated nudes. However, Meta came under fire for inadvertently promoting nudify apps through advertisements – even though those ads violate the company’s standards. The company recently filed a lawsuit against Hong Kong nudify company CrushAI, after the company ran more than 87,000 ads across Meta platforms in violation of Meta’s rules on non-consensual intimate imagery.

Tech companies can do much more to mitigate harms from nudify and other deepfake tools. For example, they can ensure guardrails are in place for deepfake generators, remove content more quickly, and ban or suspend user accounts. They can restrict search results and block keywords such as “undress” or “nudify”, issue “nudges” or warnings to people using related search terms, and use watermarking and provenance indicators to identify the origins of images. They can also work together to share signals of suspicious activity (for example, advertising attempts) and share digital hashes (a unique code like a fingerprint) of known image-based abuse or child sexual abuse content with other platforms to prevent recirculation.

Education is also key

Placing the onus on tech companies and ensuring they are held accountable to reduce the harms from nudify tools is important. But it’s not going to stop the problem. Education must also be a key focus.
Young people need comprehensive education on how to critically examine and discuss digital information and content, including digital data privacy, digital rights and respectful digital relationships. Digital literacy and respectful relationships education shouldn’t be based on shame and fear-based messaging but rather on affirmative consent. That means giving young people the skills to recognise and negotiate consent to receive, request and share intimate images, including deepfake images.

We need effective bystander interventions. This means teaching bystanders how to effectively and safely challenge harmful behaviours and how to support victim-survivors of deepfake abuse. We also need well-resourced online and offline support systems so victim-survivors, perpetrators, bystanders and support persons can get the help they need.

If this article has raised issues for you, call 1800RESPECT on 1800 737 732 or visit the eSafety Commissioner’s website for helpful online safety resources. You can also contact Lifeline crisis support on 13 11 14 or text 0477 13 11 14, Suicide Call Back Service on 1300 659 467, or Kids Helpline on 1800 55 1800 (for young people aged 5-25). If you or someone you know is in immediate danger, call the police on 000.

Nicola Henry, Professor, Australian Research Council Future Fellow, & Deputy Director, Social Equity Research Centre, RMIT University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Chinese shopping app Temu suspended in Vietnam: state media (2025-06-14T12:25:00+05:30)

HANOI - Chinese shopping app Temu has been forced to suspend its services in Vietnam after it failed to register with authorities, state media said on Thursday. Goods ordered on Temu were no longer being cleared through customs in Vietnam, state media reported, after the company missed an end-of-November deadline to register with the ministry of industry and trade. It was not clear when or if Temu would be able to resume business.

On Temu's app, Vietnamese has been removed as an interface language. Users now have the option to select from English, Chinese and French. The announcement comes after the ministry raised concerns in October over the stunningly low prices on the online marketplace and their impact on Vietnamese producers.

A spokesperson for Temu told AFP that they were working with Vietnamese authorities to register their business. "We have submitted all required documents for the registration," the spokesperson said.

Temu has sucked in consumers across the world with its low prices and all-powerful algorithms. Since it began operations in Vietnam in October, it has caught the eye of Vietnamese consumers with discounts of up to 90 percent and free shipping, according to state media. But the month of its launch, the ministry raised concerns about the "unusually low prices of its goods, which may impact domestically produced products", according to the official Vietnam News Agency. "It is unclear whether they (the goods) are authentic," VNA cited the ministry as saying.

Temu is also one of the fastest-growing apps in Europe, but the EU has hit the shopping platform with a probe over concerns the site is doing too little to stop the sale of illegal products. In April, regulators in South Korea opened an investigation into Temu on suspicion of unfair practices including false advertising and poor product quality.

Cambodia launches mobile app to combat violence against women, girls (2025-06-14T12:24:00+05:30)


Some cybersecurity apps could be worse for privacy than nothing at all (2025-06-09T13:06:00+05:30)


It’s been a busy few weeks for cybersecurity researchers and reporters. There was the Facebook hack, the Google+ data breach, and allegations that the Chinese government implanted spying chips in hardware components.

In the midst of all this, some other important news was overlooked. In early September, Apple removed several Trend Micro anti-malware tools from the Mac app store after they were found to be collecting unnecessary personal information from users, such as browser history. Trend Micro has now removed this function from the apps.

It’s a good reminder that not all security apps will make your online movements more secure – and, in some cases, they could be worse than doing nothing at all. It’s wise to do your due diligence before you download that ad-blocker or VPN – read on for some tips.

Security apps

There is a range of tools people use to protect themselves from cyber threats:

Know the risks

There are multiple dangers in using these kinds of security software, especially without the proper background knowledge. The risks include:

Accessing unnecessary data

Many security tools request access to your personal information. In many cases, they need to do this to protect your device. For example, antivirus software requires information such as browser history, personal files, and unique identifiers to function. But in some cases, tools request more access than they need for functionality. This was the case with the Trend Micro apps.

Creating a false sense of security

It makes sense that if you download a security app, you believe your online data is more secure. But sometimes mobile security tools don’t provide security at the expected levels, or don’t provide the claimed services at all. If you think you can install a state-of-the-art mobile malware detection tool and then take risks online, you are mistaken.

For example, a 2017 study showed it was not hard to create malware that can bypass 95% of commercial Android antivirus tools. Another study showed that 18% of mobile VPN apps did not encrypt user traffic at all. And if you are using Tor, there are many mistakes you can make that will compromise your anonymity and privacy – especially if you are not familiar with the Tor setup and try to modify its configurations.

Lately, there have been reports of fake antivirus software, which opens backdoors for spyware, ransomware and adware, occupying the top spots on the app charts. Earlier this year it was reported that 20 million Google Chrome users had downloaded fake ad-blocker extensions.

Software going rogue

A great deal of free and paid security software, created by enthusiastic individual developers or small companies, is available in app stores. While this software can provide handy features, it can be poorly maintained.
More importantly, it can be hijacked or bought by attackers, and then used to harvest personal information or propagate malware. This mainly happens in the case of browser extensions.

Know what you’re giving away

The table below shows what sort of personal data are being requested by the top-10 antivirus, app-locker and ad-blocking apps in the Android app store. As you can see, antivirus tools have access to almost all the data stored in the mobile phone. That doesn’t necessarily mean any of these apps are doing anything bad, but it’s worth noting just how much personal information we are entrusting to these apps without knowing much about them.

How to be safer

Follow these pointers to do a better job of keeping your smart devices secure:

Consider whether you need a security app

If you stick to the official app stores, install few apps, and browse only a routine set of websites, you probably don’t need extra security software. Instead, simply stick to the security guidelines provided by the manufacturer, be diligent about updating your operating system, and don’t click links from untrusted sources.

If you do, use antivirus software

But before you select one, read product descriptions and online reviews. Stick to solutions from well-known vendors. Find out what it does, and most importantly what it doesn’t do. Then read the permissions it requests and see whether they make sense. Once installed, update the software as required.

Be careful with other security tools

Only install other security tools, such as ad-blockers, app-lockers and VPN clients, if it is absolutely necessary and you trust the developer. The returns from such software can be minimal when compared with the associated risks.

Suranga Seneviratne, Lecturer - Security, University of Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Apps that help parents protect kids from cybercrime may be unsafe too (2025-05-30T12:25:00+05:30)


Children, like adults, are spending more time online. At home and school pre-schoolers now use an array of apps and platforms to learn, play and be entertained. While there are reported benefits, including learning through exploration, many parents are still concerned about screen time, cybersafety and internet addiction.

An increasingly popular technical solution is parental control apps. These enable parents to monitor, filter and restrict children’s online interactions and experiences. Parental control apps that work by blocking dangerous or explicit content can be marketed as “taking the battle out of screen time” and giving parents “peace of mind”. But such a quick fix is inadequate when addressing the complicated reasons behind screen time. Much worse, though, the apps expose users to privacy and other safety issues most people aren’t aware of.

What apps do parents use?

Research by Australia’s eSafety Commission shows 4% of preschoolers’ parents use parental control apps. This increases to 7% of parents with older children and 8% of parents with teenagers. Global trends suggest these figures are bound to rise.

Parents download parental control apps onto a child’s mobile phone, laptop or tablet. Most parental control apps enable parents to monitor or restrict inappropriate online content from wherever they are. They provide parents with insights into which sites their child has visited and for how long, as well as who they have interacted with. Qustodio, for example, claims to keep children “safer from cyber threats” by filtering inappropriate content, setting time limits on use and even monitoring text messages. Boomerang, another popular parental control app, enables parents to set time limits per day, per app.

Why they may not be safe

Parental control apps need many permissions to access particular systems and functions on devices. Some 80% of parental control apps request access to location, contacts and storage.
While these permissions help the apps carry out detailed monitoring, some of them may not be necessary for the app to function as described. For instance, several apps designed to monitor children’s online activity ask for permissions such as “read calendar”, “read contacts” and “record audio”, none of which is justified in the app description or the privacy policy.

Many are considered “dangerous permissions”, which means they are used to access information that could affect the user’s privacy and make their device more vulnerable to attack. For example, Boomerang requests more than 91 permissions, 16 of which are considered “dangerous”. The permission “access fine location”, for instance, allows the app to access the precise geographic location of the user. The “read phone state” permission allows the app to know your phone number, network information and status of outgoing calls.

It’s not just the apps that get that information. Many of these apps embed data-hungry third-party software development kits (SDKs). SDKs are a set of software tools and programs used by developers to save them from tedious coding. However, some SDKs can make the app developers money from collecting personally identifiable information, such as name, location and contacts, from children and parents.

Because third-party SDKs are developed by a company separate from the original app, they have different protocols around data sharing and privacy. Yet any permissions sought by the host app are also inherited by third-party SDKs. The Google Play Store, which is used for Android phones, does not force developers to explain to users whether they have embedded third-party SDKs, so users cannot make an informed decision when they consent to the terms and conditions.

Apple’s App Store is more transparent. Developers must state if their apps use third-party code and whether the information collected is used to track users or is linked to their identity or device.
Apple has removed a number of parental control apps from the App Store due to their invasive features. Many popular parental control apps in the Google Play Store have extensive security and privacy vulnerabilities due to SDKs. For example, SDKs for Google Ads, Google Firebase and Google Analytics are present in over 50% of parental control apps in the Google Play Store, while the Facebook SDK is present in 43%. A US study focusing on whether parental control apps complied with laws to protect the personal data of children under 13 found roughly 57% of these apps were in violation of the law.
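The kind of permission check described above can be sketched in a few lines of Python. This is a minimal illustration, not a tool used in the research: the function name `audit_permissions`, the example app and the idea of a "justified" set are assumptions made here, and the dangerous-permission set lists only the five Android permissions the article mentions by name, not the full set that Android classifies as dangerous.

```python
# Partial list: only the permissions named in the article, written with
# their Android manifest identifiers.
DANGEROUS_PERMISSIONS = {
    "android.permission.READ_CALENDAR",
    "android.permission.READ_CONTACTS",
    "android.permission.RECORD_AUDIO",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_PHONE_STATE",
}


def audit_permissions(requested, justified=frozenset()):
    """Flag dangerous permissions, and those the app's description never explains.

    `requested` is the list of permissions an app asks for; `justified` is the
    subset a reviewer found explained in the description or privacy policy.
    """
    dangerous = sorted(set(requested) & DANGEROUS_PERMISSIONS)
    unjustified = [p for p in dangerous if p not in justified]
    return {"dangerous": dangerous, "unjustified": unjustified}


# Hypothetical app: location is explained in its description, the rest is not.
EXAMPLE_REQUESTED = [
    "android.permission.INTERNET",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_CONTACTS",
    "android.permission.RECORD_AUDIO",
]
EXAMPLE_JUSTIFIED = {"android.permission.ACCESS_FINE_LOCATION"}

if __name__ == "__main__":
    report = audit_permissions(EXAMPLE_REQUESTED, EXAMPLE_JUSTIFIED)
    print("dangerous:", report["dangerous"])
    print("unjustified:", report["unjustified"])
```

Because embedded SDKs inherit whatever the host app is granted, every entry in the "unjustified" list is also access handed to any third-party code inside the app.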

Not all parental control apps request dangerous permissions. The Safer Kid app, for example, does not request any dangerous permissions but costs US$200 per year.

Why should I worry?

Personal data has become a valuable commodity in the digital economy. Huge volumes of data are generated from our digital engagements and traded by data brokers (who collect information about users to sell to other companies and/or individuals) and tech companies. The value is not in a singular data point, but the creation of huge datasets that can be processed to make predictions about individual behaviours.

While this is a problem for all users, it is particularly problematic for children. Children are thought to be more vulnerable to online threats and persuasion than adults due to more limited digital skills and less awareness of online risks. Data-driven advertising establishes habits and taste preferences in young children, positioning them as consumers by exploiting insecurities and using peer influence.

Parental control apps have also been targeted by attackers because of their security weaknesses, exposing children’s personal information.

There are better ways to reduce screen time

It is also questionable whether parental control apps are worthwhile. Research suggests issues of screen time and cybercrime are best managed through helping children self-regulate and reflect on their online behaviour. Rather than policing time limits for screen use, parents could focus on the content, context and connections their child is making. Parents could encourage their children to talk to them about what happens online, to help make them more aware of risk and what to do about it.

Restrictive approaches also reduce opportunities for kids’ growth and beneficial online activity. Unsurprisingly, children report parental control apps are overly invasive, negatively impacting their relationships with parents.

Instead of a technical “quick-fix,” we need an educational response that is ethical, sustainable and builds young people’s digital agency. Children will not be under their parents’ surveillance forever, so we need to help them prepare for online challenges and risks.

Luci Pangrazio, Postdoctoral Research Fellow, Deakin University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Many parenting apps are reinforcing the gender divide (2024-12-13T11:39:00+05:30)

Almost every day, a smartphone app emerges offering some new and exciting functionality. But it’s come to my attention that many of these apps are continuing an old trend: they are purveyors of gender-based marketing. So although in many ways we are making attempts to decrease the gender divide, it would seem that these new technologies are actually doing just the opposite.

Search through the list of apps on the market and it’s obvious that, while the responsibility of parenting is increasingly shared between partners, app developers continue to ignore this reality. Compared to the hundreds of apps for pregnant women, very few apps are marketed to their partners (male or female). Perhaps more problematically, apps specifically developed for male partners are generally poorly executed. They lack detailed information most interested partners would like, while emphasising gendered stereotypes.

Apps for mums and apps for dads

Many of these apps work to design a male partner’s role in pregnancy, in such a way that men are constructed as being barely capable of playing a supporting role and needing all the help they can get. They typically include humorous, simplified information and reminders about what being a good partner involves. Together, these features render fathering an issue of keeping up appearances and impressing mum, rather than a serious attempt at engaging in parenting.

Another concern is that these apps may encourage men to monitor or survey their partners’ activities. Some apps suggest that partners should keep an eye on what their pregnant partner ingests for the sake of their baby’s health. The m Pregnancy – for Men with Pregnant Women app includes “information about what your partner cannot eat” and others include information on what activities their partners should avoid (such as heavy lifting).

This same app for fathers-to-be prides itself on its particular take on a common feature of pregnancy apps – the foetus size comparison. Rather than comparing the foetus to a piece of fruit or vegetable as many apps do, this app for Apple users describes the size of the developing baby “in terms that men understand e.g. similar to the size of a football, or a bottle of beer”. These features are common to many support resources for men with pregnant partners (including apps, guidebooks and magazines). This suggests that although parenting and fathering appear to be changing in terms of policy, sociocultural practices and expectations – including more involved dads and more dads as primary carers – the mechanisms and devices to support these changes, including apps, are lagging behind.

Harmful and outdated stereotypes

My area of research is pregnancy and motherhood and apps offer a great many conveniences for pregnant women and new mums. They can help to keep track of particular activities, facilitate multitasking while breastfeeding and even sing your baby a calming lullaby. What’s more, they provide tidbits of information that can be easily accessed on your smartphone, so you don’t have to trawl through a pregnancy guidebook for advice or facts. But these apps harmfully perpetuate the notion that mothering is essentialised: part of a woman’s nature or instinct. If we continue to believe this, and if apps continue to promote the idea that men have no innate parental knowledge or intuition, then we will all suffer as a result. Apps such as m Pregnancy and Android’s New Dad – Pregnancy For Dads also appeal to activities traditionally viewed as masculine – a gender-biased misconception in itself.

Apps for men provide information on how to prepare a nursery or how to deal with finance or insurance. This goes beyond pregnancy apps. Do a quick Google search for “apps for busy mums” and “apps for busy dads” and it’s easy to see that the marketing of apps to parents draws on numerous gender stereotypes. This is common to almost every article in the vein of the best apps for mums. These tend to feature apps linked to a number of key ideas. First, there’s the focus on body image apps: think calorie counters, dieting apps such as Calorie Counter and Diet Tracker, exercise apps such as Lumen Trails Organizer+ and online shopping apps such as Makeup Alley. Second, the apps frequently reinforce misguided assumptions that the mother bears responsibility for household and family management. For instance, such apps might provide functionality for making to-do lists and shopping lists (Grocery Gadget), and offer advice on cooking (Emeals) and cleaning (Homeroutines and Tody). Third, there are apps that focus on health, including Feeding Your Kids, Sudden Infant Death Syndrome apps (SIDS and Kids Safe Sleeping) and vaccination reminder apps. Considering the wealth of research focusing on women’s responsibilities for their children’s health, it is not surprising that women are expected to use apps to supposedly ease the burden of responsibility.

The lists don’t help

In lists for the best apps for busy dads, there are other common themes. Almost always included in these are suggestions for ways in which dads can keep their children entertained. What’s worrying is that most also include suggestions for keeping dad entertained, including sports apps and home renovation apps (such as the ESPN Score Centre and Home Depot apps). When apps for housework were included, the screenshots almost always promote 1950s traditions – for example, dad taking out the rubbish and mum doing cooking and cleaning.

If apps are gendered, then it’s hardly a surprise to find many apps are targeted towards women improving their post-baby bodies and embracing their potential “yummy mummy” status. Research has shown a woman’s relationship with her body can be bound to expectations to conform to dominant, commodified images of beauty. It’s no wonder apps present a new tool for marketing ideals of femininity. I acknowledge that apps can be used to have fun or satisfy curiosity – is my baby the size of a peach or a rockmelon today? – but it is also clear that apps offer great potential for empowerment and the expansion of knowledge.

Promoting equality in apps

What I am suggesting is that app developers – and, even more importantly, those who are marketing new apps – should acknowledge the overlapping of technology and subjectivity. There is a need to actively engage with the performative potential of apps in order to create technologies that encourage more equality in parenting and family life. There is no need to create gendered apps when it is clear that not all men want to engage with only masculine-defined activities and not all women want to engage with so-called feminine activities. If app developers come to recognise this and make their apps more gender-neutral they may be able to reach a much broader market, and may actually begin to act as tools of learning, convenience and teamwork. Sophia Johnson, Research Assistant, Sociology and Social Policy, University of Sydney This article is republished from The Conversation under a Creative Commons license. Read the original article. |

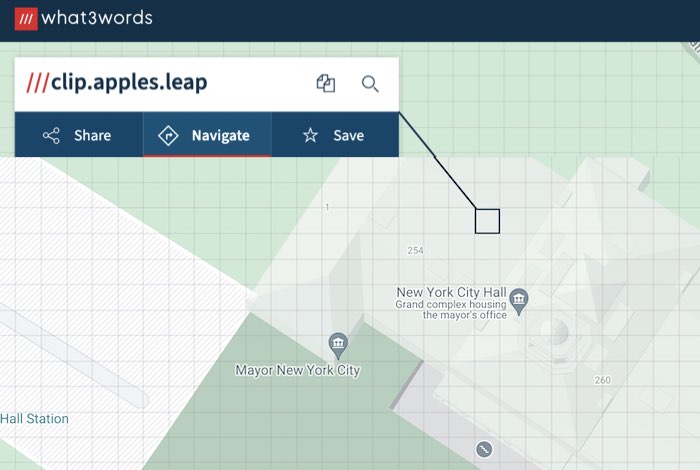

Woman Who Fell 60-feet Down a Mountain Was Saved by What3words Location App (2024-12-06T12:14:00+05:30)

Sarah Robert in the Alps – SWNS

A hiker says he was able to help save his aunt’s life because he had the what3words app on his phone. Ed Farnworth was hiking in Switzerland with his 59-year-old aunt Sarah when she fell 60 feet down a mountain and suffered serious injuries. The two were in the woods, far away from flat ground, and there were no landmarks Ed could use to tell the emergency services where they were. Luckily, he remembered he had the what3words app on his phone, which provides users with a unique three-word code that reveals their precise location. The emergency personnel knew all about what3words, and the address ///crabmeat.hers.froze showed them the exact spot where Sarah landed. The 31-year-old hiker from Manchester, England, regularly visits his aunt, who now lives in Switzerland and hikes often. They had both researched the trail beforehand.

“The weather was great and we were having an amazing day,” recalled Ed. “I was leading the way in front of her on our descent down the mountain when I turned around and saw she had started to lose her balance.

“She was very close to the edge and started to fall, she had a backpack on, so her balance was all out of sync.

“She tumbled over the edge and from then on, she didn’t stop tumbling down. She was hysterical, shouting my name and shouting help.”

Ed saw Sarah hit her head extremely hard on a tree. “It was a dense whack and then she stopped making any noise.

“I lost sight of her as I tried to make my way down to her, I’d never dealt with anything like this before. I found her on her front unconscious, I thought that she was dead at this point.

“She had a huge cut in her forehead, an open wound and her face was purple already. Her arm was badly broken, and she was covered in blood.”

Ed knew he had to remain calm and call for help, but panicked that he had no idea where they were, until he remembered the what3words app.
What3words app screenshot

“I had downloaded what3words a few years ago, I remember downloading it because I go on all these hiking trips, I had genuinely forgotten about it.”

Looking back, Ed says the free app saved Sarah’s life, as it took only 25 minutes for the helicopter to find the pair. While waiting, Sarah was revived and started saying she was cold.

“She was visibly concussed, she didn’t know who I was to start with, and she was asking me the same question over and over.

“She was crying out for her husband and daughter, and she still can’t remember a thing from what happened.”

Without the app, Ed would potentially have had to leave to find help or shout for help, both of which could have made Sarah even more distressed. “It sped the whole process up and stopped any further injuries occurring, I am super super grateful that I had that app.”

Sarah suffered a broken arm, gashed forehead, and was severely concussed in the fall. Her face was completely misshapen and she remained in hospital for over a week, finally going home in a neck brace, with a metal plate in her arm. Sarah remains passionate about hiking, and Ed wants to make sure every hiker is aware of how important it is to download what3words, which divides the world into 10ft squares, each one named by a unique combination of three words.

what3words

“People having the app is reassuring to me,” said Ed. “I’m taking a group of people from work hiking tomorrow and I’ll make sure they all have what3words.”

The app addresses are accepted by over 4,800 dispatchers who answer 911 calls in the US. Emergency services in the UK are recommending the free app, because “it saves lives.” What3words is also integrated into the navigation systems of many Mercedes-Benz, Jaguar, Land Rover, Subaru and Mitsubishi vehicles in the US, making it easy for drivers to enter the 3-word addresses straight into their car’s navigation system.
Sarah Robert still has no recollection of the fall itself, but is thankful for the outcome. “What3words helped speed up the whole process, for which I am extremely grateful. Without it, I don’t see how I would have got the help I needed and things may have ended much worse.

“I had never heard of or used the app before my nephew explained it to me, but I’m so thankful he did.”

|

The Apple and Google app store monopoly could end (2024-09-24T11:49:00+05:30)

Rules and regulations may be introduced to cut out the monopoly the two main players have over app developers.

Greig Paul, The Conversation: New rules on mobile app stores could trigger a wave of creative, cheaper apps with more privacy options for users. Every budding developer dreams of creating an app that goes viral and makes lots of money overnight. The Angry Birds game became a worldwide phenomenon within weeks when it launched in 2009 and made US$10 million in its first year. But, overall, the numbers make it clear that mobile apps don’t guarantee wealth. A 2021 study showed only 0.5% of consumer apps succeed commercially. Developers have to jostle for attention among the almost 3 million apps and games on Google Play and 4.5 million apps and games on the Apple store. On Apple’s iPhone and iPad platforms, the App Store is the only way to distribute apps. Until recently, Apple and Google’s stores charged a 30% commission fee. But both halved it for most independent app developers and small businesses after lawsuits such as the one in 2020, when video games company Epic Games claimed Apple had an illegal monopoly of the market. Epic Games lost, but Apple was subject to App Store changes that are on hold. Both Epic Games and Apple are appealing. Epic Games has filed a similar case against Google, which is set to go to trial in 2023. App stores set the rules on privacy, security and even what types of apps can be made. Third-party stores could set different rules which might be more relaxed and allow developers to keep more of the money from apps they sell.

You have been Sherlocked

Independent developers say they are sometimes being “Sherlocked” by Google and Apple. They develop an app, and not long afterward the platforms embed the app’s features in the operating system itself, killing the developer’s product. FlickType was developed as a third-party keyboard for iPhones and Apple Watches in 2019.
Shortly after Apple apparently told the developer that keyboards for the Apple Watch were not allowed, the company announced the feature itself. It can take between three and nine months to develop one app and can cost between $40,000 and $300,000 to build a minimum viable product. Some apps take much longer than this to develop. In 2021 a group of UK-based developers filed a £1.5 billion collective action suit against Apple over its store fees. The case will be heard in the UK. The European Commission told Apple it had abused its position and distorted competition in the music streaming industry, and that its restrictions on app developers prevent them from telling users about cheaper alternative apps. For instance, when Apple builds a music app, rivals such as Spotify argue this is unfair. They have to pay 15% or 30% of their revenues to Apple, their rival, which operates the store platform. Until recently, Apple prevented Spotify from telling users about cheaper options, such as subscribing via the service’s website. A report from the UK’s Competition and Markets Authority highlighted concerns that the tech giants were creating barriers to innovation and competition. Its full market study is due to report back in June 2022. The UK government has pledged to introduce new laws “when parliamentary time allows.”

Alternative app stores

The EU’s Digital Markets Act could be in force by spring 2023. The legislation is designed to open up mobile platforms by allowing users to install apps from alternative stores, and to ensure app store providers don’t favor their own products or services over third-party developers’ offerings. In February 2022 a US Senate panel approved a bill that aims to rein in app stores. It is possible to install apps from other niche stores on Android hardware – such as the F-Droid store for open source apps.
But the Play Store is available on almost every Android phone by default, meaning the apps available on it can reach a much larger number of users. Both Apple and Google’s app review processes (which look at developers’ apps before making them available) have been heavily criticized for their lack of transparency, consistency and general inequality. Independent developers have no real leverage against international billion-dollar companies. Google has been criticized for failing to provide meaningful clarification when it removes apps from its store.

Users’ privacy

Apple expressed security and privacy concerns about allowing apps from other stores on its devices. App store review processes can try to ensure that apps follow their privacy policies. Most users don’t read these, however, and apps can already access and share a lot more data than users realize. Third-party app stores are likely to create a trade-off between user freedom and user safety. Some users may prefer Apple and Google’s approach to privacy. Others may prefer a more open experience, where they can install apps from smaller independent developers, who can develop their apps without having to jump through the large app stores’ hoops. The fact is that it’s possible to give users this choice – evidence from lawsuits shows that Apple originally planned to support running apps from outside its app store. The Digital Markets Act might force Apple to reconsider. The DMA won’t deliver results for users and developers unless it is properly implemented. The European Commission looks set to become a dedicated regulator for the first time. This will take time though, and the commission will need to grow a team large enough to provide meaningful oversight and enforcement. Greig Paul, Lead Mobile Networks and Security Engineer, University of Strathclyde. This article is republished from The Conversation under a Creative Commons license.
The Apple and Google app store monopoly could end | MorungExpress | morungexpress.com

|